Linear Logic

What mistakes does my model make?

Go beyond aggregated metrics and evaluate specific scenarios.

We're empowering engineers to pinpoint where their models fail. Our mission is to help you build models you can trust. Explore further to discover how.

Iterate to innovate

That's right: one must iterate to innovate! Yet as engineers, we spent far too much time building one-off UI charts to share model performance with stakeholders.

Before training, ML engineers curate and sample data. After training, ML teams evaluate and benchmark model performance, and once the model is deployed, they debug it.

Linear Logic Datasets offers a single source of truth for data within your team.

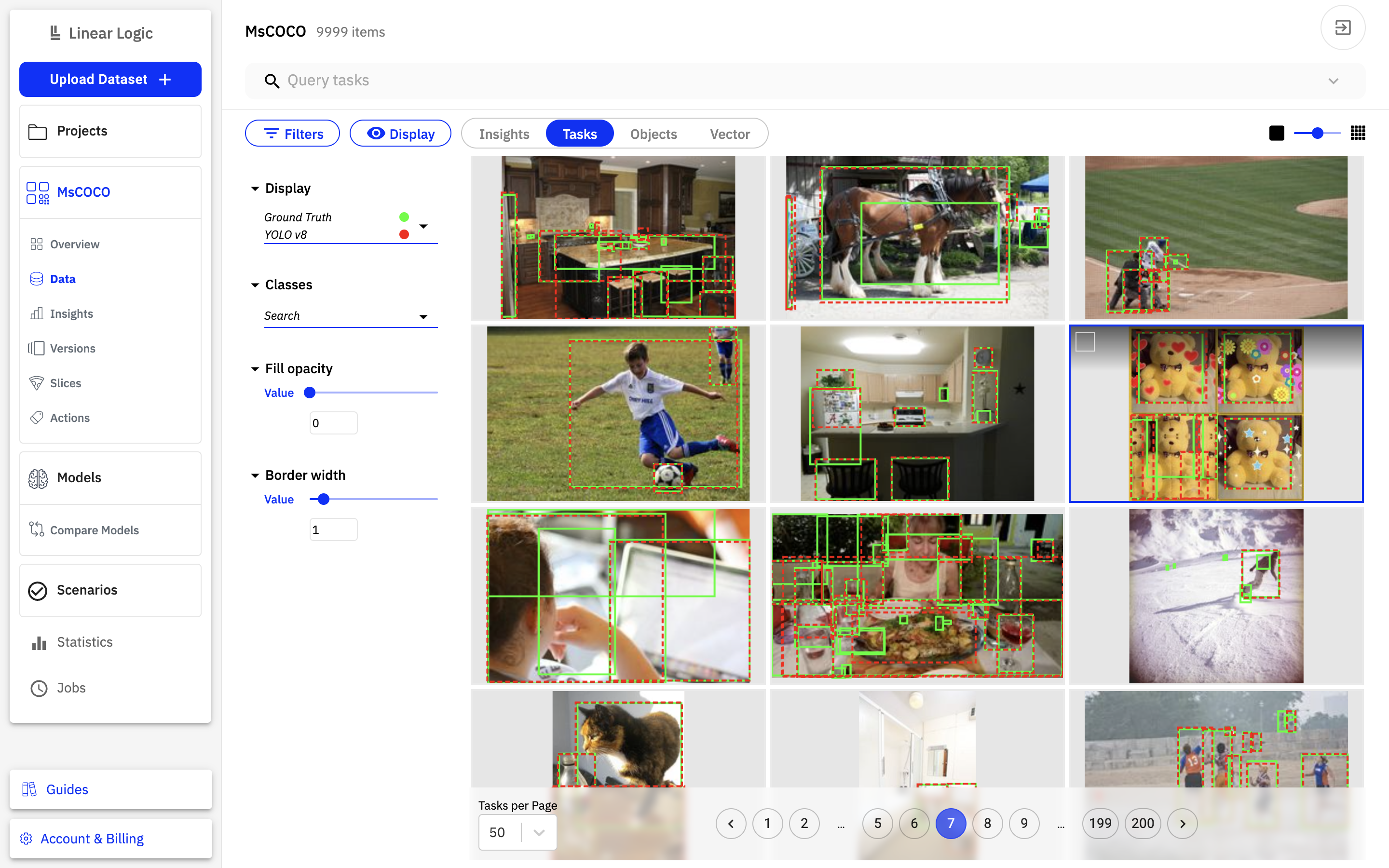

Bring together your data, labels and model predictions

Find model errors

Understand.

Understand your model's performance by exploring its errors in a graphical interface. Identify where your model is weak and assess how those weaknesses affect its reliability. Click on a scenario to query the underlying data.

Analyze.

Assess your model's performance at the object level to gain deeper insight into its behavior. Pinpoint the specific scenarios in which your model is prone to errors, sharpening your understanding of its strengths and weaknesses.

Act.

Once you have identified weaknesses, it's time to improve your model's performance. Gather relevant training examples to correct model errors and refine the next iteration of your model. Then circle back to the Understand phase, armed with richer insights and a more robust model.

Compare model runs

Data-driven iteration made easy.

Open Datasets

Better Data, Better AI.

It's time to get real.

Linear Logic

USA

251 Little Falls Drive

Wilmington, New Castle County

Delaware 19808

NETHERLANDS

Science Park 608, Unit K10

1098 XH Amsterdam